Aljibe is a DDEV add-on that provides tools and a curated base for developing Drupal projects. It's what we use at Metadrop as the foundation for every project. Although it's tailored to our needs, making it opinionated, it follows the Drupal way, and we think it can be useful for many other developers and teams.

It's built as a set of DDEV add-ons, with Aljibe being the main one. Many of the tools it offers are provided by a DDEV add-on that can be used alone if you don't want to use Aljibe. Check the plug-ins we provide in our DDEV Add-on Registry.

Additionally, Aljibe uses Metadrop/drupal-dev to declare the dependencies of all the tools it provides. It is just a Composer package, not a DDEV add-on. You can use it directly, if you wish, or gather inspiration from it to create your own project base stack.

The main goal of Aljibe, aside from speeding up project kick-offs, is to provide a solid base with lots of QA tools for high-quality projects. That's the real Aljibe focus: quality.

Among the tools it provides, you'll find Behat, BackstopJS, PHPStan, PHP CodeSniffer, Unlighthouse, multiple linters, a place for documentation, and some tools to ease Drupal actions, such as quickly setting up a site (or multisite).

One of the approaches we follow with Aljibe is to put all project files in the project's repository. This includes not only the code but also technical documentation, helper scripts, testing configuration and data, and everything you might need to start hacking on the project. This avoids having project information scattered across different tools and places.

Let's see what you can find in Aljibe.

A folder tree

Aljibe provides a folder tree for all the usual Drupal requirements. When you create a project using Aljibe, you get the following folders:

- backups: A folder for storing any manual backups you might need during development. This folder is ignored by Git.

- config: A folder for Drupal site configurations. Inside, you'll find a common folder for the main configuration and an envs folder for configuration splits. Have a look at its README.md file for more information on how to organise your Drupal configuration in more complex scenarios.

- docs: A folder for project documentation. See the MkDocs section for more information.

- drush: This is where the project's Drush configuration lives, including aliases, policies, and custom Drush commands.

- patches: It's recommended to download patches and commit them locally, and this folder is intended for storing those downloaded patches.

- postman: A folder with Postman collections and environments to be run using Newman (see below).

- private-files: This contains the Drupal private files. Private files shouldn't be stored under the website's document root, so they're stored here instead.

- recipes: A place to store recipes for this project. If you're not familiar with Drupal recipes, now is the time to investigate!

- reports: Some tools provided by Aljibe generate reports, which are usually placed here.

- scripts: If your project needs a custom script, this is where you can store it.

- tests: Aljibe is very test-focused. Test tool configuration and test data are stored here.

This set of folders covers most Drupal requirements while following the Drupal way (e.g., folders that should be outside the document root, a folder for the new Drupal recipes, reports, and so on).

Static code analysis

This is where we start actively ensuring project quality. Aljibe provides many tools for static code analysis. Because running each one individually isn't trivial, Aljibe provides two easy ways to run all the analysis tools: GrumPHP and phpqa.

Both tools serve as a wrapper for multiple other tools, but each is run differently and in different situations.

GrumPHP

The first way is automatic and runs before every commit thanks to GrumPHP. It checks every commit to make sure it complies with several policies: it runs PHPStan, PHP CodeSniffer, and several linters on newly added code. It also checks composer.json, image file sizes, and Git commit messages, among other things. And yes, it can be annoying, but it's the first barrier to keeping bad code out of your project. You can always skip it, but you should feel bad about doing so. It might be grumpy, but it takes care of the project.

Aljibe already adds the Git hook to run GrumPHP on your commits, straight out of the box.
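If you'd rather see what the hook is going to complain about before committing, GrumPHP can also be run on demand. Here is a minimal sketch, assuming the grumphp executable installed by Composer is available inside the web container in the same way as the other tools in this article. DDEV_BIN and run_grumphp are names invented for this example, not part of Aljibe.

```shell
# Hypothetical helper: run the GrumPHP task list on demand inside the web container.
# DDEV_BIN is only an illustrative override so the wrapper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

run_grumphp() {
  # `grumphp run` executes the configured tasks without needing a commit.
  "$DDEV_BIN" exec grumphp run "$@"
}
```

Load the function in your shell and call run_grumphp from the project root. And if you really must skip the hook for one commit, Git's own git commit --no-verify does it.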

phpqa

This is similar to GrumPHP in the sense that it runs many static code analysers on your code. However, this tool is intended to be used in CI/CD or manually on your local developer machine. Again, it uses PHPStan, PHP CodeSniffer, linters, PHPMD, etc.

You can run phpqa with just one command.

ddev exec phpqa

The analysers

Let's see some of the tools GrumPHP and phpqa use under the hood.

PHPStan

This tool analyses your code and finds possible bugs. I'd say it's a must-have and is, in fact, used by default in the GitLab Drupal Templates.

To run PHPStan on your own, type:

ddev exec phpstan

It's configured to check custom code because contributed code is supposed to be checked elsewhere. However, you can run it on any piece of code if you want.

To analyse a certain folder, add its path:

ddev exec phpstan analyze /path/to/folder

By default, PHPStan is configured at level 8. This is quite strict, but all the projects we develop from scratch use this level. Sometimes it can be a little annoying, but the benefits are worth the effort.
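Level 8 can be a lot to take in when you point PHPStan at older code. PHPStan's analyse command accepts a --level option, so you should be able to try a different level for a single run without touching the configuration. A minimal sketch, assuming custom modules live in web/modules/custom (adjust it to your docroot); DDEV_BIN and phpstan_at_level are hypothetical names.

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical helper: analyse one path at a given PHPStan rule level.
# $1 = rule level (defaults to 8, the level mentioned above)
# $2 = path to analyse (defaults to an assumed custom modules folder)
phpstan_at_level() {
  level="${1:-8}"
  target="${2:-web/modules/custom}"
  "$DDEV_BIN" exec phpstan analyze --level="$level" "$target"
}
```

For example, phpstan_at_level 5 analyses the assumed custom modules folder at level 5, while phpstan_at_level 8 web/themes/custom keeps the default level but targets another folder.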

phpcs

PHP CodeSniffer checks your code against the Drupal coding standards and can even fix some violations automatically. It's preconfigured, so you don't need to do anything.

Apart from being run by GrumPHP and phpqa, you can run it manually:

ddev exec phpcs

This will check your custom code.

If you want to check a specific folder, add its path as the final parameter:

ddev exec phpcs /path/to/folder

PHPMD

PHP Mess Detector analyses code, looking for possible bugs, suboptimal code, overcomplicated expressions, and unused parameters, methods, and properties.

If you need to run it yourself instead of waiting for GrumPHP or running phpqa, you can call it with this command:

ddev exec phpmd /path/to/folder/or/file format ruleset

Use -h to see all possible report formats (how the tool outputs results) and rulesets. You can provide more than one ruleset by separating them with commas.

Linters

Linters for YAML and JSON files are run by GrumPHP and phpqa. You can, of course, run them manually as well. Take a look at the vendor/bin folder and experiment with the executables you find there.
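To check a single folder with several analysers in one go, the individual commands above can be chained. The following is a rough sketch rather than anything Aljibe ships: the web/modules/custom default path, the text report format, and the codesize and unusedcode rulesets are assumptions chosen for illustration, and DDEV_BIN and analyse_path are made-up names.

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical helper: run PHPStan, PHP CodeSniffer and PHPMD on one path.
# Every tool runs even if an earlier one fails; the exit status is the
# number of tools that reported problems (0 means all of them passed).
analyse_path() {
  target="${1:-web/modules/custom}"
  failed=0

  "$DDEV_BIN" exec phpstan analyze "$target" || failed=$((failed + 1))
  "$DDEV_BIN" exec phpcs "$target" || failed=$((failed + 1))
  # PHPMD needs a report format and one or more comma-separated rulesets.
  "$DDEV_BIN" exec phpmd "$target" text codesize,unusedcode || failed=$((failed + 1))

  return "$failed"
}
```

Because the exit status is zero only when all three tools pass, the function can be used directly in a condition or as a step in a larger script.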

Testing tools

As Aljibe is focused on quality, it not only provides static code analysers but also complete testing tools. All of these tools can be run from the command line or in a CI/CD system. In fact, we have all these tools integrated into the automatic QA workflow in our projects.

PHPUnit

This is the standard Drupal testing tool, but unfortunately, you need to configure it before using it. Aljibe takes care of this and provides a configuration file that works out of the box.

ddev exec phpunit

This command runs all PHPUnit tests, including core, which is probably not what you want.

To run tests on a specific module, provide its path:

ddev exec phpunit /path/to/module

Behat

Behat is a Behaviour Driven Development (BDD) tool that allows you to write tests describing how an application should behave based on user interactions (User Stories). Tests are written in natural language, making them easier to understand than pure code tests. Each test consists of several steps, and each step can either perform an action on the site (visit a URL, create content, click buttons and links, wait for AJAX, run cron, and many other actions) or check something (a text is present, an element is visible, the current URL matches a certain expression, and many more).

Additionally, some libraries are included to improve Drupal support, adding several Behat testing steps for the most common use cases, such as logging in as a user with a particular role, creating test content, or finding expressions within specific content regions.

The libraries included are:

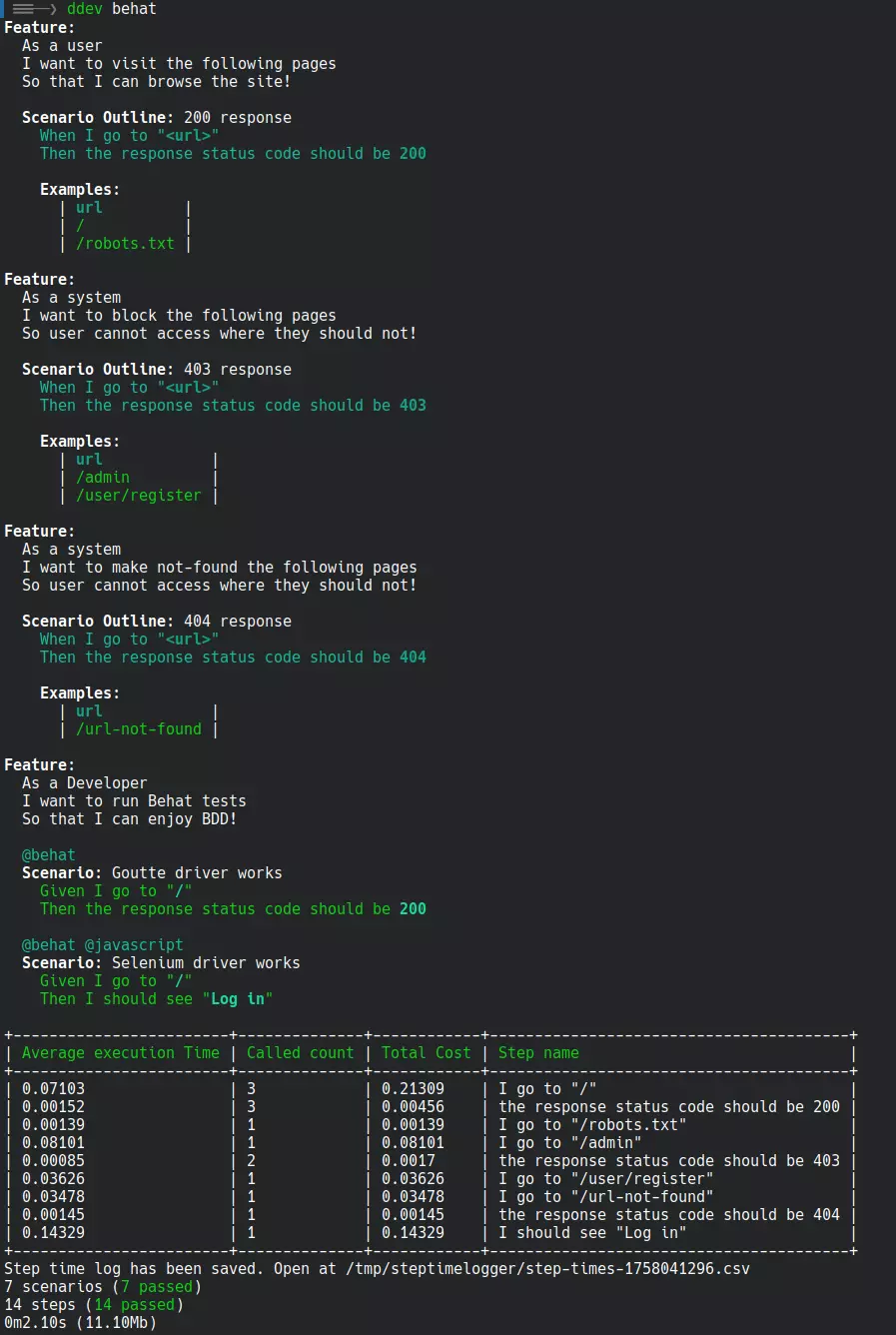

Behat is preconfigured, so you can run the minimal provided tests with the following command:

ddev behat

Here is an example of the basic tests provided by Aljibe:

Note that we've added a Behat plug-in to display the time consumed by each step. Tests can take a long time to run, and it's useful to see optimisation opportunities as part of the test output.

BackstopJS

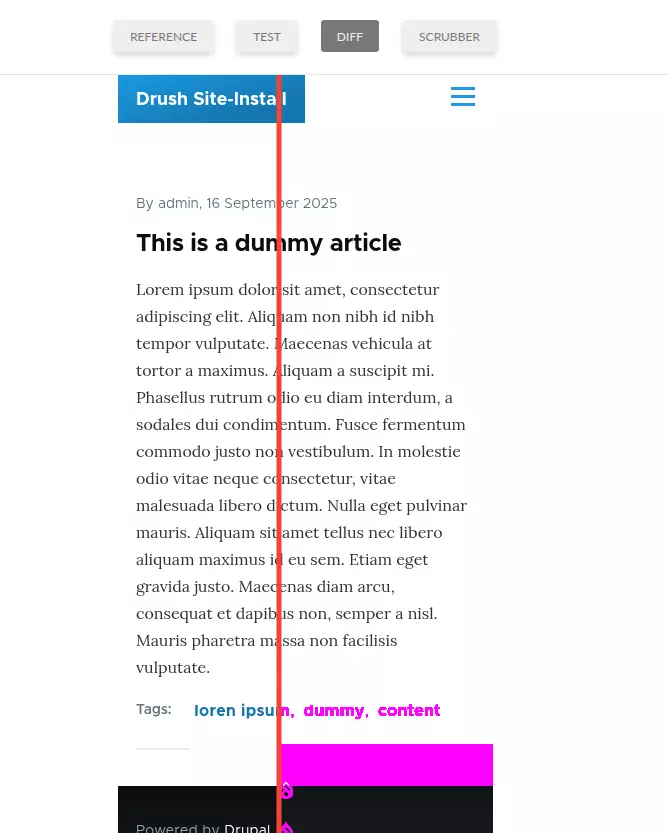

BackstopJS is a visual regression tool. It compares a set of screenshots from a list of URLs with a set of reference screenshots. If there's a significant difference, an error is triggered. You can also compare parts of the rendered web page, such as elements specified using CSS selectors. This tool detects errors in CSS, rendering, or even content that won't be detected by testing tools that check functionality. It also provides a report to easily spot the problem.

A BackstopJS report screenshot displaying an error because article tags disappeared.

BackstopJS is preconfigured. To generate the reference images, run this command:

ddev backstopjs reference

Then, to generate new screenshots and compare them to the reference images, run this command:

ddev backstopjs test

Pa11y

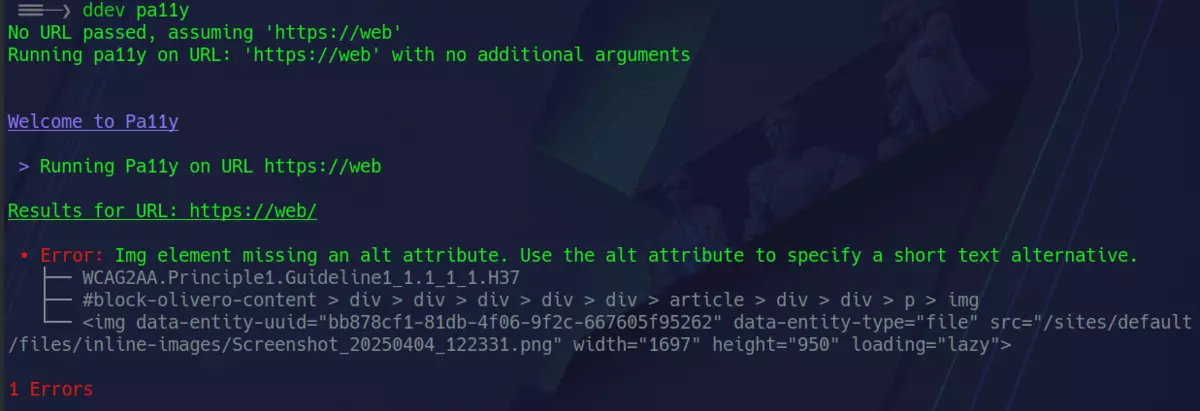

Pa11y is an accessibility tool that loads web pages and highlights any accessibility issues it finds. Given that accessibility is increasingly important, not only because we should produce inclusive websites but also because it is a legal requirement, this tool is invaluable.

Aljibe deploys Pa11y preconfigured, so you only have to run one command to scan your home page:

ddev pa11y

The results are almost immediate.

Pa11y found an image without alt text.

Pa11y can be run on any site, not just your project:

ddev pa11y https://random.site

Newman

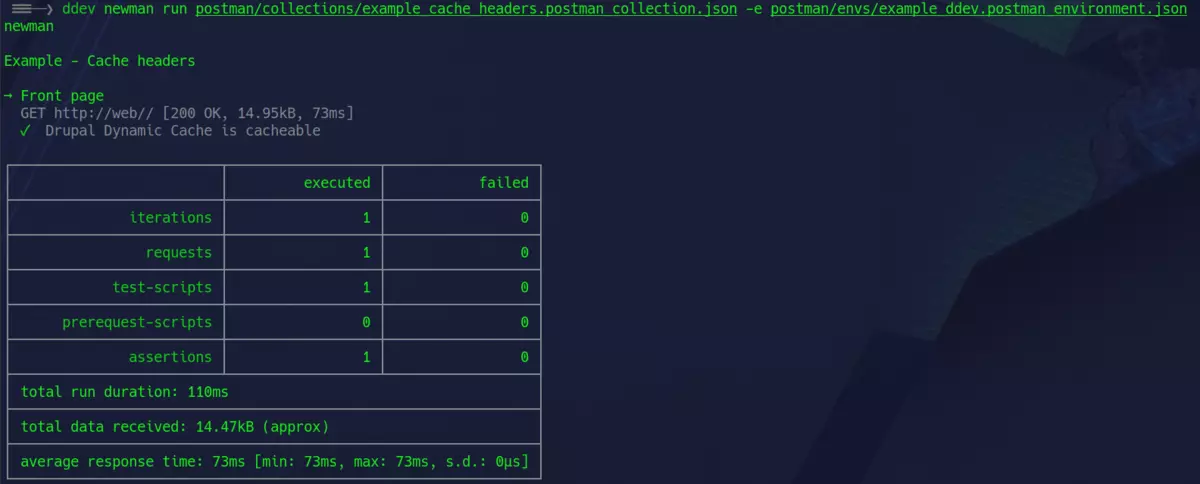

Sometimes you need to test APIs, and Newman is a good choice for that. Essentially, it's a CLI tool that can execute Postman collections and report any unexpected results. Aljibe provides a container for Newman and an example to get you started with testing. The example is interesting: it tests that the site's Dynamic Page Cache is working. You can test other headers, or even the site's API, if the site offers one.

Running it requires some parameters because there are no sane defaults—Newman requires a collection and an environment to run. To run the provided example, try this:

ddev newman run postman/collections/example_cache_headers.postman_collection.json -e postman/envs/example_ddev.postman_environment.json

Newman will execute the collection and display a clear table at the end.

A simple Newman collection run.

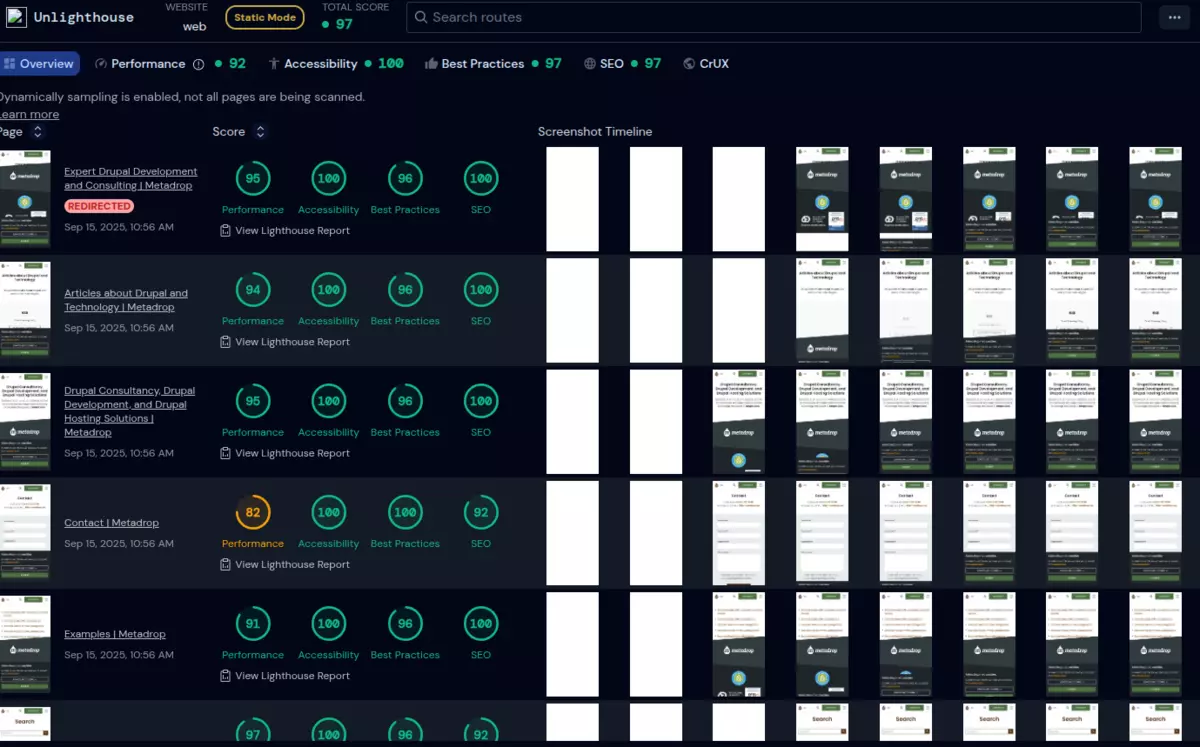
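If the postman folder grows beyond the example, you may want to run every collection in one go. This is a minimal sketch, assuming all collections live in postman/collections and share the .postman_collection.json suffix used by the example. DDEV_BIN and run_all_collections are hypothetical names.

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical helper: run every Postman collection in a folder against one environment.
# $1 = environment file, $2 = collections folder (defaults follow the example paths).
# The exit status is the number of collections whose run failed.
run_all_collections() {
  env_file="${1:-postman/envs/example_ddev.postman_environment.json}"
  collections_dir="${2:-postman/collections}"
  failed=0

  for collection in "$collections_dir"/*.postman_collection.json; do
    # Skip the unexpanded glob pattern when the folder has no collections.
    [ -e "$collection" ] || continue
    "$DDEV_BIN" newman run "$collection" -e "$env_file" || failed=$((failed + 1))
  done

  return "$failed"
}
```

Run it from the project root so the relative paths match the ones used in the example command.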

Unlighthouse

Lighthouse is an automated auditing, performance metrics, and best practices tool for the web that can be found in Chromium-based browsers. While it's a good tool, it only scans one URL. Unlighthouse discovers pages on your site and runs Lighthouse on each discovered URL, offering a summary report of all the results.

An Unlighthouse report example.

Unlighthouse offers ways to limit the number of pages it visits and also automatically detects common patterns to avoid hammering your site with URLs that may have similar Lighthouse scores.

You can run Unlighthouse simply by typing this:

ddev unlighthouse

At the end of the execution, Unlighthouse keeps running because the report it offers needs to be served through a web server. Once you've finished checking the report, you can stop it.

For CI/CD systems, the command unlighthouse-ci is provided. It's almost the same as the previous command, but Unlighthouse generates the report and stops without serving it. Because CI/CD systems usually allow you to serve artefacts—and the report is an artefact—you'll be able to browse the report.
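Since all these tools run from the command line, chaining them in a CI job is straightforward. The sketch below shows one possible way to do it, not our actual workflow. It assumes unlighthouse-ci is invoked as ddev unlighthouse-ci like the other DDEV commands, that the BackstopJS reference images already exist, and that custom modules live in web/modules/custom. DDEV_BIN and run_qa_suite are hypothetical names.

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical CI helper: run the test tools one after another and list the
# ones that failed. $1 = path passed to PHPUnit (assumed default below).
run_qa_suite() {
  failed=""

  "$DDEV_BIN" exec phpunit "${1:-web/modules/custom}" || failed="$failed phpunit"
  "$DDEV_BIN" behat || failed="$failed behat"
  "$DDEV_BIN" backstopjs test || failed="$failed backstopjs"
  "$DDEV_BIN" pa11y || failed="$failed pa11y"
  "$DDEV_BIN" unlighthouse-ci || failed="$failed unlighthouse"

  if [ -n "$failed" ]; then
    # Report on stderr so the tools' own output stays untouched.
    echo "Failed tools:$failed" >&2
    return 1
  fi
}
```

Running every tool before failing means a single CI run shows all the problems at once, instead of stopping at the first broken tool.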

Test environments and smoke tests

The testing tools Aljibe provides are configured so you can have different test environments, meaning different testing configurations per environment. You'd usually test everything on every environment, especially before production, to make sure nothing is broken. However, sometimes there are subtle differences that need to be considered.

This opens the door to smoke tests. What if the deployment process failed and something on your production site is badly broken? Have you ever manually browsed the site after a deployment just to be absolutely sure everything is in place? I guess we all have at some point. But guess what—you can automate that. You can run a different set of tests on the production site using the configuration for the production environment. For example, you could run BackstopJS to make sure the site renders properly. Or, run Behat on the site's search functionality to be sure users can still search the site.

These tests, called smoke tests, don't thoroughly test the entire site. They just test the most obvious and critical errors, such as the home page, searching, or page rendering. They don't change the site and are similar to what you'd do manually to make sure the site is working.

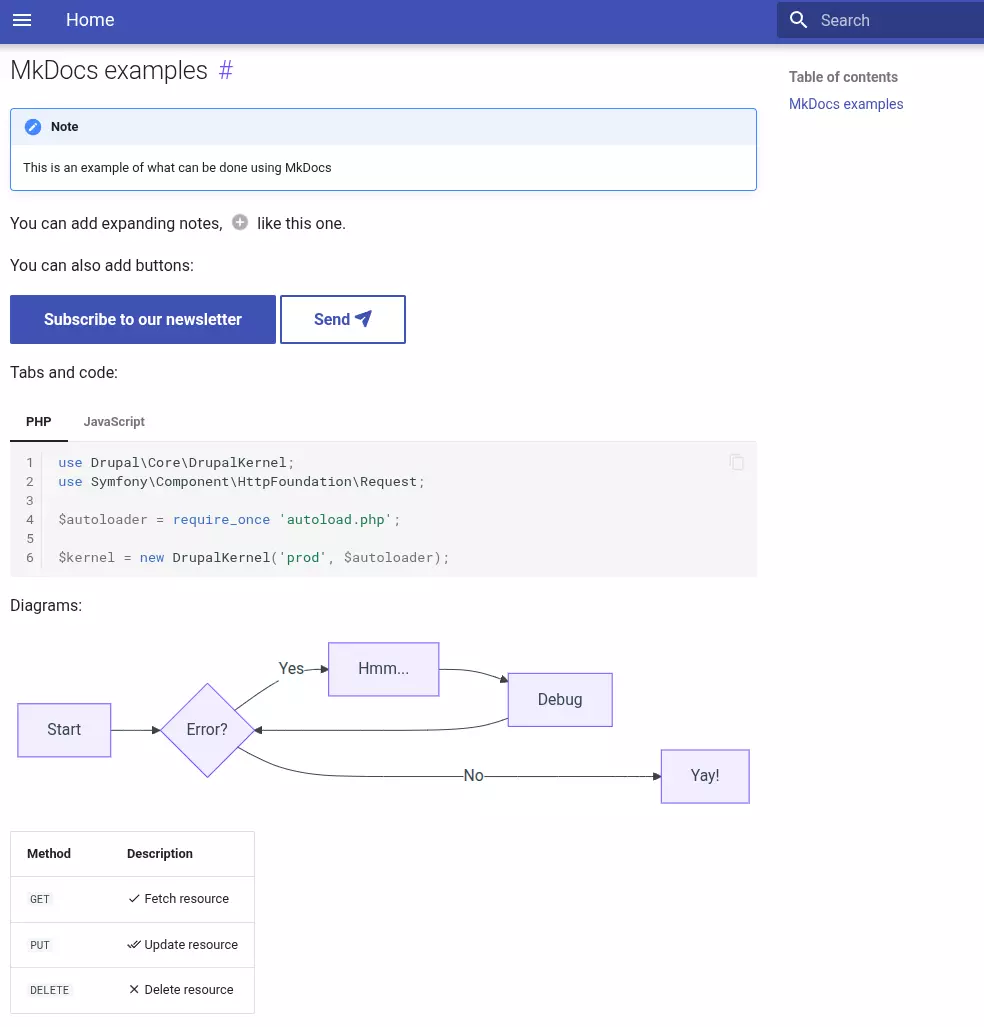

Documentation

This is a fundamental part of a project, which is often overlooked. One problem is documentation being scattered across different applications and resources. While there are many ways to organise documentation, especially for big projects with multiple types of users—from developers and DevOps to editors and marketers—our approach is to keep technical documentation about the project tied to the source code, within the repository. This documentation can cover architecture, environment setup, operations, or any other useful information for the DevOps and developer staff.

This is why Aljibe ships MkDocs with Material. This provides a powerful documentation tool that is very simple to use. It offers Markdown syntax plus many other features, including syntax highlighting, tabs, image formatting, Mermaid diagrams, and search functionality.

Some examples of the MkDocs Material capabilities.

When DDEV starts the project, this documentation system, based on MkDocs, is up and ready to be consulted or updated.

Onboarding

That's a tough moment. You join an existing project that's been developed for months and have little idea of how it works. In many places, this means you have to talk to your co-workers to understand how to set up your local environment and what magical tech tricks are required to get the site up and running locally.

For this reason, Aljibe provides some commands to ease this process, along with a documentation system.

The site-install command sets up the site using either the Drupal configuration in the config folder or a database dump. In the first case, you don't even need a parameter—just run:

ddev site-install

In the second case, you need to pass the path to a database dump.

ddev site-install /path/to/db/dump

In both cases, the project is started using DDEV, any possible previous database is dropped, the front end is built using npm, and configuration files, such as settings.local.php, are copied to the right places. Aljibe even offers a hook system in case the project needs to run special commands at certain stages: before and after setting up, before and after a site install, and before and after the installation from configuration or a database dump.

When I have to jump into a project, I just clone the repository and run ddev setup, and most of the time I get a working project with no effort.

The setup command uses other commands, like site-install and frontend, under the hood. The former performs the actual installation, while the latter uses npm to compile themes.
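If you script the installation, for example in a CI job, the two site-install variants can be folded into one helper. A minimal sketch, with install_site and DDEV_BIN as hypothetical names:

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical helper: install from configuration when no argument is given,
# or from a database dump when a path is passed. A missing dump is an error
# instead of silently falling back to the configuration install.
install_site() {
  dump="${1:-}"

  if [ -z "$dump" ]; then
    "$DDEV_BIN" site-install
  elif [ -f "$dump" ]; then
    "$DDEV_BIN" site-install "$dump"
  else
    echo "Database dump not found: $dump" >&2
    return 1
  fi
}
```

Calling install_site with no arguments installs from configuration, while install_site backups/dump.sql.gz (a made-up file name) loads that dump and fails early if the file doesn't exist.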

Installing from configuration

This probably deserves a separate article, but at Metadrop we tend to test and develop sites using a site installed from its configuration. This allows for faster set-up times because loading a database dump can be time-consuming. It also means we don't have to worry about sanitising database dumps or handling sensitive data; we simply don't manage delicate data. This is why Aljibe prioritises setting up a site from its configuration. And because you always need some content, we rely on the Default Content module to create that dummy content.

Of course, sometimes an obscure bug appears that can only be reproduced with real data. In that case, with the proper security measures, loading a database dump is the way to go.

Other tools

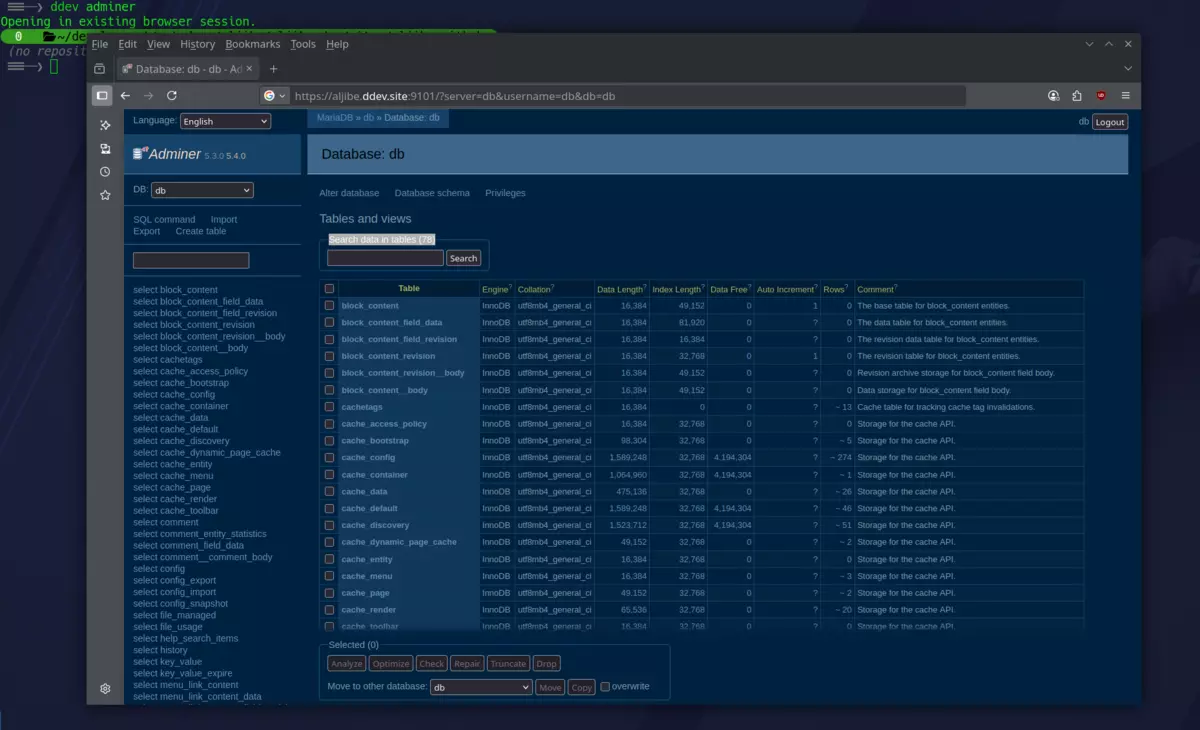

Wait, there's more! Automatically update Drupal core and extensions? Sure! Multisite support? Yes, why not? A database GUI? You got it!

Automatic updates

Updating a Drupal site is a tedious task that needs to be done frequently. The good news is that if you have good test coverage, you can automate this process. We developed Drupal Updater and have been using it for over two years. It's a PHP package that automatically updates Drupal core, modules, themes and dependencies. It automatically creates a branch containing all the updates. If the tests pass, these updates can be safely merged into the main branch, updating your project almost effortlessly. If you're curious about this, you can read more in the article: Drupal Updater: Streamlining Drupal Maintenance Updates from CLI.

And, of course, it's included in Aljibe. To update your site, just run:

ddev exec drupal-updater

You may be thinking of the Automatic Updates module. While this module is a great idea, I think it's more focused on less experienced users. Also, it doesn't support minor core updates by default (though it can be enabled), and updating contributed modules and themes is still experimental. Drupal Updater is stable, tested, and overcomes these limitations. It is much more developer-oriented, though.

Multisite support

Aljibe supports multisite projects. Commands can be run targeting one site, or the tools used can be configured for different sites. It even provides a command to create a new database in the database container:

ddev create-database second_site

Adminer

When you need to browse the database, a UI is sometimes handy. Aljibe ships with Adminer, which provides a web interface supporting MariaDB, MySQL, PostgreSQL, and more.

Accessing it is as simple as running a command:

ddev adminer

This will launch your local browser with the appropriate parameters to seamlessly log in to the database.

How to use it?

Follow these simple steps.

First, initialise DDEV in an empty folder:

ddev config --auto

Then, install Aljibe:

ddev add-on get metadrop/ddev-aljibe

Finally, run the Aljibe assistant, which will ask you some questions and set everything up:

ddev aljibe-assistant

You may be wondering: can I use it in existing projects? And the answer is: yes! There are more steps, but it's not hard at all. See the "Add Aljibe to existing project" section in the Aljibe GitHub repository.
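For a brand-new project, the three steps above can be wrapped in a small function. This is just a convenience sketch: new_aljibe_project and DDEV_BIN are hypothetical names, and the assistant is still interactive, so it will ask its questions as usual.

```shell
# DDEV_BIN is an illustrative override so the helper can be dry-run with `echo`.
DDEV_BIN="${DDEV_BIN:-ddev}"

# Hypothetical helper: create the project folder if needed and run the
# three set-up steps inside it, stopping at the first step that fails.
new_aljibe_project() {
  project_dir="$1"
  [ -n "$project_dir" ] || { echo "Usage: new_aljibe_project <folder>" >&2; return 1; }

  mkdir -p "$project_dir" || return 1
  (
    # The subshell keeps the caller's working directory untouched.
    cd "$project_dir" || exit 1
    "$DDEV_BIN" config --auto &&
      "$DDEV_BIN" add-on get metadrop/ddev-aljibe &&
      "$DDEV_BIN" aljibe-assistant
  )
}
```

For example, new_aljibe_project my-site (a made-up folder name) leaves you with a configured project in that folder once the assistant finishes.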

Future plans for Aljibe

Aljibe condenses most of our way of developing projects. Some people may find that it doesn't fit their needs, which is why we want to make it more flexible. For example, Aljibe also installs Redis, but this isn't always required. We plan to use the new DDEV dynamic dependencies system to only install these containers if a user really wants them. We think this is key if we want more people to use Aljibe.

It also installs Artisan and creates a subtheme based on it. This is usual for us, but as much as we love Artisan, we understand this should be an optional step.

Additionally, as we've recently started using Hoppscotch, the (mostly) Open Source alternative to Postman, we may add a new DDEV add-on for running Hoppscotch collections, similar to the Newman add-on.

The idea is to continue developing Aljibe while listening to what other people from the Drupal Community think, to make it more appealing as a local development tool. We would love to hear from other teams using Aljibe, and we're convinced that it can be a very useful tool for many. So, if you think the functionalities it offers can help you, give it a try and tell us what you thought.