Introduction

Bot-generated traffic on the internet has grown significantly, negatively affecting website performance and increasing operational costs.

A notable example is Wikimedia's recent report, "How crawlers impact the operations of the Wikimedia projects", which states that 65% of their most costly traffic comes from bots.

What Are the Causes?

- Expansion of Artificial Intelligence: AI tools have made it easier to create bots, including malicious ones, which can now bypass security systems more effectively.

- Crawlers for Model Training: These bots access websites at scale to collect data, including less popular or deeply nested pages that are rarely cached, so their requests reach the origin server and consume significant resources.

- Growth of Malicious Bots: Activities such as brute-force attacks, vulnerability scanning, and API exploitation increase the load and risk on websites.

What Is the Impact?

- Performance: Bots consume server resources, slowing down load times and potentially causing outages.

- Distorted Analytics: Non-human traffic skews key metrics used for decision-making.

- Security: Increased exposure to data breaches, DDoS attacks, and other threats.

- Costs: Higher use of bandwidth and infrastructure resources.

- Infrastructure: Persistent bot traffic puts sustained pressure on hosting systems.

Mitigation strategies

1. Guide bots

The simplest way to start controlling bot access is by properly configuring the robots.txt file.

Although many malicious bots ignore robots.txt, it remains a useful tool for guiding legitimate crawlers, such as Googlebot, GPTBot, and others.

In Drupal, two essential modules help manage this process:

- Simple XML Sitemap: allows you to easily define which content should be included in the sitemap.xml. This helps direct bots to relevant content.

- RobotsTxt: enables you to manage and edit the robots.txt file directly from the Drupal admin interface.

A recommended but often overlooked practice is to block bots from accessing internal search result pages (such as "/search"). Filter combinations can turn these pages into hundreds of distinct URLs that offer no valuable new content for indexing.

All relevant content for bots should be included in the sitemap.xml, so these additional pages can be safely excluded without affecting search engine visibility.
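Before writing such rules, it helps to know how compliant crawlers interpret them. Under RFC 9309 (the Robots Exclusion Protocol), each `Disallow` value is a path prefix in which `*` matches any sequence of characters and a trailing `$` anchors the end of the URL. Python's standard `urllib.robotparser` does not handle `*` inside paths, so the sketch below is a small matcher written for illustration only: it ignores `Allow` rules, longest-match precedence, and percent-encoding, and the rule list is simply a set of search-page rules of the kind discussed here.

```python
import re

# Search-page Disallow rules of the kind discussed in this section.
DISALLOW_RULES = [
    "/*/search", "/*/search?*", "/*/search/", "/*/search/*",
    "/search", "/search?*", "/search/", "/search/*",
]


def rule_to_regex(rule: str) -> re.Pattern:
    """Translate one robots.txt path pattern into a compiled regex.

    '*' becomes '.*', a trailing '$' anchors the end of the path, and
    every other character is matched literally.
    """
    anchored = rule.endswith("$")
    body = rule[:-1] if anchored else rule
    pattern = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(pattern + ("$" if anchored else ""))


COMPILED_RULES = [rule_to_regex(rule) for rule in DISALLOW_RULES]


def is_disallowed(path: str) -> bool:
    """True if any rule matches; re.match anchors at the start, i.e. a prefix match."""
    return any(regex.match(path) for regex in COMPILED_RULES)


for path in ["/search?keys=drupal", "/es/search/node", "/node/42", "/search-tips"]:
    print(path, "->", "blocked" if is_disallowed(path) else "allowed")
```

The first two paths come out blocked and `/node/42` allowed. Note that `/search-tips` is blocked too: because rules are prefix matches, `Disallow: /search` covers any path that merely starts with `/search`, which is worth checking against your own URL aliases.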

For example, the "/search" paths can be blocked using the following configuration:

```
# Add these lines to the existing "User-agent: *" group.

# For sites with language prefixes (e.g. /en/search)
Disallow: /*/search
Disallow: /*/search?*
Disallow: /*/search/
Disallow: /*/search/*

# For sites without language prefixes
Disallow: /search
Disallow: /search?*
Disallow: /search/
Disallow: /search/*
```

2. Cache policy

A well-implemented cache policy is key to reducing the impact of bot traffic. It is especially effective when combined with a proxy cache (such as Varnish) and/or a CDN (such as Cloudflare, Akamai, Fastly, CDNetworks, etc.). These layers can deliver cached content—including pages and static assets—without sending requests to Drupal.

There are two core principles:

- Cache as much as possible: This includes all site pages and static resources such as images, JavaScript, and CSS files.

- Apply effective purging to avoid serving outdated content:

  - Use cache tag-based purging or URL-based purging, especially for assets like images.

  - Set high max-age header values wherever feasible.

  - Take into account all external caching layers outside of Drupal, such as the proxy cache (e.g., Varnish) and the CDN.

  - Use modules such as Purge and its ecosystem to integrate and manage multiple cache layers.
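Why long max-age values and tag-based purging work well together can be shown with a toy in-memory cache. This is a conceptual Python sketch, not the API of the Purge module or of Varnish; the class and method names are hypothetical. Each entry records the cache tags of the page that produced it (Drupal uses tags such as `node:42` and `node_list`), expires after its max-age, and can be invalidated early by tag, so a long max-age does not have to mean stale content.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class Entry:
    body: str
    tags: set[str]
    expires_at: float


@dataclass
class TagAwareCache:
    """Toy shared cache: entries expire by max-age or are purged by tag."""

    entries: dict[str, Entry] = field(default_factory=dict)

    def store(self, url: str, body: str, tags: set[str], max_age: int,
              now: float | None = None) -> None:
        now = time.time() if now is None else now
        self.entries[url] = Entry(body, set(tags), now + max_age)

    def lookup(self, url: str, now: float | None = None) -> str | None:
        """Return the cached body, or None on a miss (absent or expired)."""
        now = time.time() if now is None else now
        entry = self.entries.get(url)
        if entry is None or entry.expires_at <= now:
            return None
        return entry.body

    def purge_tag(self, tag: str) -> list[str]:
        """Remove every entry carrying `tag` and return the purged URLs."""
        purged = [url for url, entry in self.entries.items() if tag in entry.tags]
        for url in purged:
            del self.entries[url]
        return purged


# Usage: cache pages for a year, then purge precisely when content changes.
cache = TagAwareCache()
ONE_YEAR = 31_536_000
cache.store("/news/drupal-11", "<html>...</html>", {"node:42"}, ONE_YEAR, now=0)
cache.store("/news", "<html>...</html>", {"node_list", "node:42"}, ONE_YEAR, now=0)
cache.store("/about", "<html>...</html>", {"node:7"}, ONE_YEAR, now=0)
# An editor updates node 42: only the pages that display it are purged.
print(cache.purge_tag("node:42"))              # ['/news/drupal-11', '/news']
print(cache.lookup("/about", now=60) is not None)  # True
```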

Views can make caching more complex in two common scenarios:

- Use of AJAX: in Drupal 10, responses to AJAX requests made by Views are not cacheable, so they cannot be stored in any caching layer. This limitation is being addressed in Drupal 11 (see "Make ViewAjaxResponse cacheable").

- Filters with many combinations: even without AJAX, filters can generate hundreds of URLs with different query parameters. This reduces overall caching effectiveness.
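A rough count shows how quickly filters multiply URLs. The facet names and value counts below are hypothetical; the sketch assumes each facet is optional and single-select, and that every combination is also paginated.

```python
from math import prod

# Hypothetical facets on one listing page: facet name -> number of values.
FACETS = {"content_type": 4, "year": 10, "topic": 12}
PAGES = 20  # hypothetical number of result pages per combination


def count_url_variants(facets: dict, pages: int) -> int:
    """Count distinct listing URLs for optional, single-select facets.

    Each facet contributes (values + 1) choices: one per value plus "not set".
    Pagination multiplies every combination again.
    """
    combinations = prod(n + 1 for n in facets.values())
    return combinations * pages


print(count_url_variants(FACETS, PAGES))  # 14300
```

With these numbers, a single listing page exposes 5 × 11 × 13 × 20 = 14,300 distinct URLs, each a separate cache entry that a crawler can request and that a human visitor is unlikely to reuse.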

For the first case, custom solutions can be implemented within Drupal to improve caching behavior for AJAX-based Views.

For the second case, the Drupal module Facet Bot Blocker can help reduce the impact of excessive URL combinations. However, the project documentation recommends blocking bots at the CDN level instead of relying solely on the module.

Therefore, the most effective way to address both scenarios is to implement bot-blocking strategies at the CDN level, when available. We will discuss this in more detail later.

3. CDNs

CDNs provide several important advantages for controlling and filtering traffic before it reaches Drupal:

- Distributed caching: improves load times and reduces pressure on the origin server.

- Web Application Firewall (WAF) functionality: filters malicious traffic using pattern-based rules.

- DDoS mitigation: detects and blocks large-scale attacks before they reach the server.

Most CDNs include preconfigured systems to detect and block malicious bots. This section, however, focuses on tools that require specific configuration beyond the default options offered by providers.

There are several CDN providers on the market, such as Cloudflare, Akamai, Fastly, and CDNetworks, and the available tools and strategies vary by provider.

3.1. CDN-Level Caching

Caching content at the CDN level, in front of the proxy cache (such as Varnish), is a highly effective strategy, because requests served from the CDN never reach the origin. However, it requires a well-implemented cache purging policy: one of the most common issues is outdated content caused by poor purge management.

3.2. Bot Management

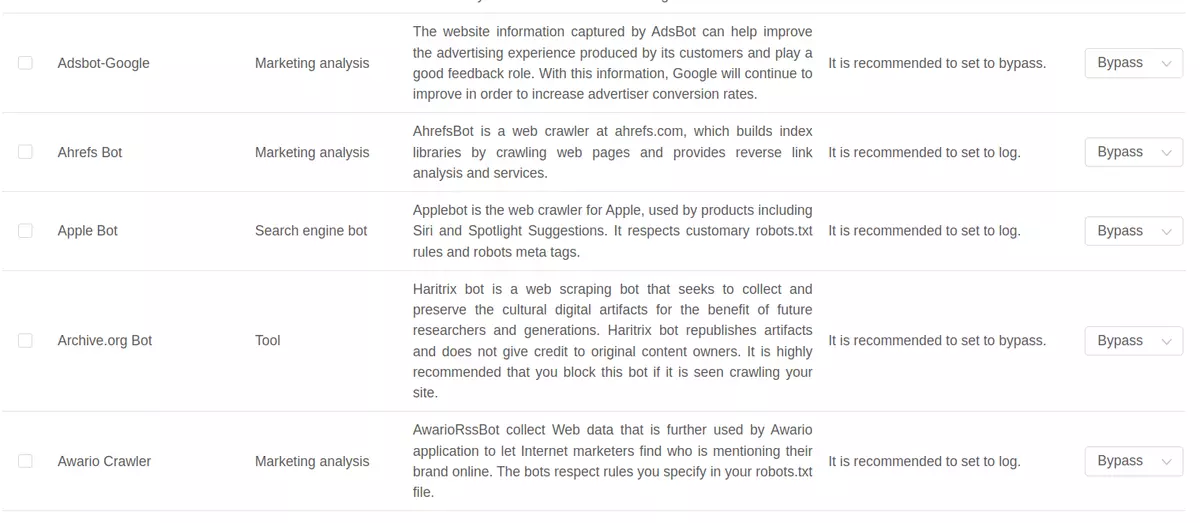

Some CDN providers maintain a catalog of known bots that can be either blocked or exempted from certain rules. It is important to review this list and adjust it according to your site’s needs. For example, the SEO team may use an analytics tool that depends on a specific bot. In that case, you must ensure that the bot is not blocked.

3.3. Blocking by User-Agent

For bots not included in standard catalogs, you can create custom rules based on the User-Agent. It is recommended to use regular expressions to target only the bot identifier, without including variable details such as version numbers.

For example:

```
^.*Scrapy.*
```

This rule matches and blocks any request whose User-Agent contains "Scrapy", whatever the version number.
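Before deploying a User-Agent rule, it can help to test the pattern locally. The Python sketch below uses the standard `re` module; CDN rule engines have their own regex dialects and options (case sensitivity in particular), so treat it as an approximation. The sample User-Agent strings are illustrative.

```python
import re

# The pattern from the rule above. Python's re is case-sensitive by default,
# so a lowercase "scrapy" does not match.
SCRAPY_RULE = re.compile(r"^.*Scrapy.*")


def should_block(user_agent: str) -> bool:
    """Return True when the User-Agent matches the Scrapy rule."""
    return SCRAPY_RULE.match(user_agent) is not None


# Illustrative User-Agent strings.
samples = [
    "Scrapy/2.11.0 (+https://scrapy.org)",
    "Scrapy/1.8.0 (+https://scrapy.org)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "my-scrapy-client/0.1",
]

for ua in samples:
    verdict = "block" if should_block(ua) else "allow"
    print(f"{verdict:5}  {ua}")
```

Both Scrapy versions are caught by one rule and the browser string is allowed, while the lowercase `my-scrapy-client` slips through, which is why it is worth checking how your CDN handles case. For single-line header values, the leading `^.*` and trailing `.*` do not change what matches: `re.search("Scrapy", ua)` behaves the same way.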

3.4. Blocking by URI

Many 404 errors in Drupal logs are caused by bots scanning for common vulnerabilities, such as .env files, database backups (backup.sql), or paths related to other CMS platforms like WordPress.

These requests can be blocked using regular expressions such as:

```
/wp-.*\.php$
\.(php|backup|env|yml|php4|bak|sql)$
```

It is recommended to regularly review 404 error logs to identify new patterns and expand these blocking rules.
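The URI patterns above can be checked the same way. This Python sketch applies them with `re.search` and assumes the CDN matches against the path without the query string; whether your provider includes the query string is worth verifying, because the trailing `$` would otherwise fail on a request such as `/wp-login.php?redirect_to=x`.

```python
import re

# The two URI patterns from the rules above.
URI_RULES = [
    re.compile(r"/wp-.*\.php$"),
    re.compile(r"\.(php|backup|env|yml|php4|bak|sql)$"),
]


def is_blocked_path(path: str) -> bool:
    """Return True if any rule matches somewhere in the request path."""
    return any(rule.search(path) for rule in URI_RULES)


sample_paths = [
    "/wp-login.php",
    "/.env",
    "/backup.sql",
    "/node/42",
    "/sites/default/files/logo.png",
    "/update.php",
]

for path in sample_paths:
    verdict = "block" if is_blocked_path(path) else "allow"
    print(f"{verdict:5}  {path}")
```

The last sample shows a side effect to decide on deliberately: the `\.(php|...)$` pattern also matches Drupal's own `/update.php`, so add an exception if such core scripts must stay reachable through the CDN.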

3.5. Rate Limiting

Some malicious bots avoid detection by using fake desktop User-Agent strings. However, they can still be identified by their access frequency. CDN and WAF tools allow you to limit the number of requests per IP within a defined time frame.

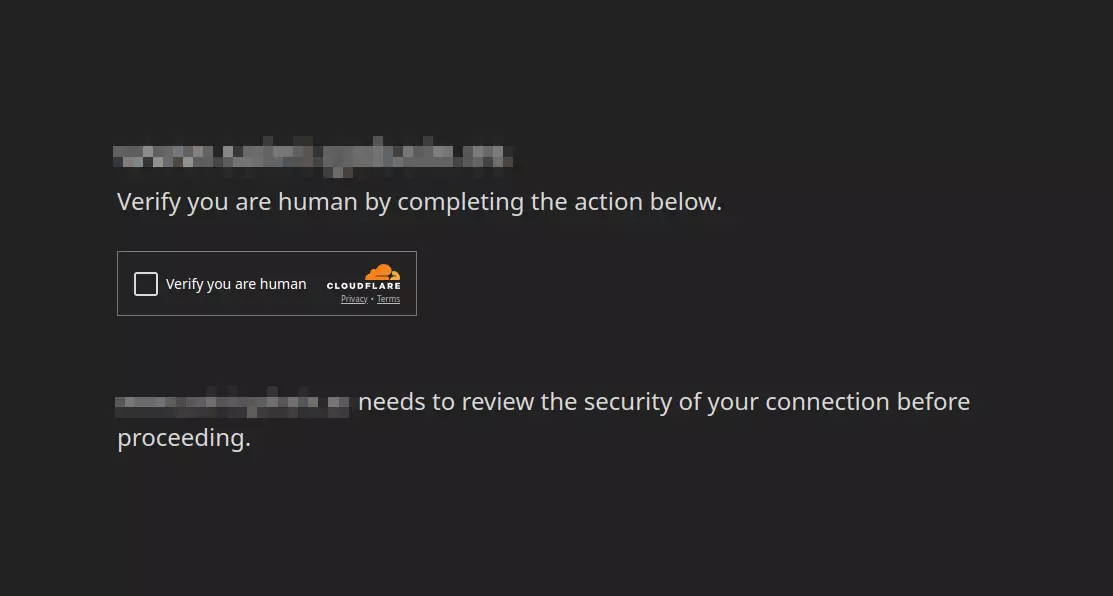

A reasonable threshold is between 150 and 250 requests per minute per IP. Instead of blocking immediately, you can configure a challenge (e.g., CAPTCHA) to verify whether the visitor is human (see the following section). Alternatively, you may temporarily block the IP if necessary.
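CDNs implement rate limiting internally, but the logic behind a per-IP threshold can be sketched as a sliding-window counter. The Python class below is hypothetical and keeps timestamps in memory; it returns `"challenge"` instead of blocking outright, mirroring the option described above. The limit of 200 requests per 60 seconds is an example value within the suggested range.

```python
from __future__ import annotations

from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Per-IP sliding-window request counter (illustrative, in-memory only)."""

    def __init__(self, limit: int = 200, window_seconds: float = 60.0) -> None:
        self.limit = limit
        self.window = window_seconds
        self.hits: dict[str, deque[float]] = defaultdict(deque)

    def check(self, ip: str, now: float) -> str:
        """Record a request from `ip` at time `now`; return "allow" or "challenge"."""
        timestamps = self.hits[ip]
        # Forget requests that have fallen out of the window.
        while timestamps and timestamps[0] <= now - self.window:
            timestamps.popleft()
        timestamps.append(now)
        return "challenge" if len(timestamps) > self.limit else "allow"


# Usage: a client sends 250 requests in 25 seconds (one every 0.1 s).
limiter = SlidingWindowLimiter(limit=200, window_seconds=60)
results = [limiter.check("203.0.113.7", now=i * 0.1) for i in range(250)]
print(results.count("allow"), results.count("challenge"))  # 200 50
```

A sliding window avoids the burst that a fixed per-minute counter allows at the boundary between two minutes, at the cost of storing one timestamp per request.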

3.6. Challenges (CAPTCHAs)

As mentioned in the caching section, some pages are not cacheable, and others (such as Views with many filters) are cacheable but generate too many variants for caching to be effective against bots.

In these cases, applying a challenge is the best solution. It is recommended to limit challenges to problematic pages only. These systems typically allow you to define a validation duration—for example, one month. This means the user sees the challenge only once during that period, minimizing the impact on user experience while effectively blocking more sophisticated bots.

This strategy should be used only in edge cases, as it involves a redirect and the loss of the referrer header, which can have side effects.
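Combining path-scoped challenges with a validation period can be sketched in Python as an HMAC-signed clearance token that carries its own expiry. Everything here is hypothetical and illustrative (the secret, the path prefixes, the token format); CDNs manage their own clearance mechanisms, and this is not how any specific provider implements them.

```python
from __future__ import annotations

import hashlib
import hmac
import time

SECRET = b"replace-with-a-random-secret"      # hypothetical signing key
CHALLENGED_PREFIXES = ("/search", "/events")  # hypothetical problematic pages
VALIDITY_SECONDS = 30 * 24 * 3600             # roughly one month


def _sign(expiry: str) -> str:
    return hmac.new(SECRET, expiry.encode(), hashlib.sha256).hexdigest()


def issue_clearance(now: float | None = None) -> str:
    """After a passed challenge, create a token of the form '<expiry>.<signature>'."""
    now = time.time() if now is None else now
    expiry = str(int(now + VALIDITY_SECONDS))
    return f"{expiry}.{_sign(expiry)}"


def has_valid_clearance(token: str | None, now: float | None = None) -> bool:
    """Check the signature and that the token has not expired."""
    if not token or "." not in token:
        return False
    now = time.time() if now is None else now
    expiry, signature = token.split(".", 1)
    return (expiry.isdigit()
            and hmac.compare_digest(signature, _sign(expiry))
            and int(expiry) > now)


def needs_challenge(path: str, token: str | None, now: float | None = None) -> bool:
    """Challenge only the listed pages, and only visitors without a valid token."""
    if not path.startswith(CHALLENGED_PREFIXES):
        return False
    return not has_valid_clearance(token, now)


token = issue_clearance(now=0)
print(needs_challenge("/search?keys=x", None, now=10))          # True
print(needs_challenge("/search?keys=x", token, now=10))         # False
print(needs_challenge("/about", None, now=10))                  # False
print(needs_challenge("/search", token, now=31 * 24 * 3600))    # True (expired)
```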

3.7. Exceptions

Not all bots are malicious:

- Legitimate search engines use bots to index the web.

- AI systems use bots not only for training purposes but also to generate search results.

- Monitoring and testing tools may also rely on bots.

Therefore, it is essential to identify legitimate bots and define exception rules in the CDN to ensure they can access the website.
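One way to tell a legitimate crawler from a bot that only copies its User-Agent is a reverse DNS lookup on the client IP, a check of the resulting domain, and a forward lookup that must resolve back to the same IP; Google documents this method for verifying Googlebot. The Python sketch below assumes that method. The resolver functions are injectable so the logic can be tested offline, and the domain suffixes are examples to adapt for each crawler you exempt. The bot catalogs described in the Bot Management section may already cover this for well-known crawlers.

```python
import socket
from typing import Callable, Iterable

Resolver = Callable[[str], tuple]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Iterable[str],
    reverse: Resolver = socket.gethostbyaddr,
    forward: Resolver = socket.gethostbyname_ex,
) -> bool:
    """Reverse-resolve `ip`, check the host's domain, then confirm the forward lookup."""
    try:
        hostname, _aliases, _addrs = reverse(ip)
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return False
    hostname = hostname.lower().rstrip(".")
    # Require a real domain boundary so "notgooglebot.com" does not pass.
    if not any(hostname == s or hostname.endswith("." + s) for s in allowed_suffixes):
        return False
    try:
        _name, _aliases, forward_ips = forward(hostname)
    except OSError:
        return False
    return ip in forward_ips


# Offline usage with fake resolvers standing in for real DNS.
GOOGLEBOT_SUFFIXES = ("googlebot.com", "google.com")


def fake_reverse(ip):
    return ("crawl-66-249-66-1.googlebot.com", [], [ip])


def fake_forward(host):
    return (host, [], ["66.249.66.1"])


print(is_verified_crawler("66.249.66.1", GOOGLEBOT_SUFFIXES, fake_reverse, fake_forward))   # True
print(is_verified_crawler("198.51.100.9", GOOGLEBOT_SUFFIXES, fake_reverse, fake_forward))  # False
```

The second call fails because the forward lookup does not return the requesting IP, which is exactly the case of a bot with a forged reverse DNS record.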

4. Alert system

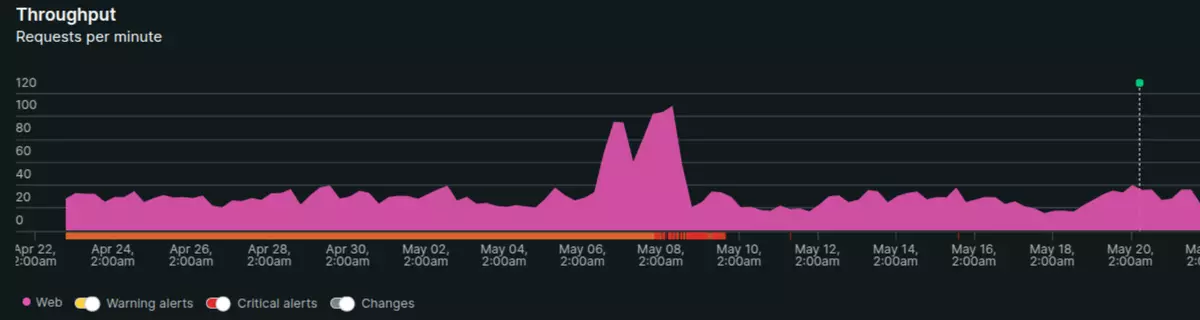

It is difficult to configure a system that is completely immune to bot traffic. Even with a well-secured architecture, regressions can occur due to development errors or unintentional configuration changes. For example, a new deployment might introduce non-cacheable pages, or a poorly applied change could weaken a protection layer.

To minimize the impact of these situations, early detection is critical. It is strongly recommended to implement monitoring systems with alerts that trigger when unusual traffic patterns are detected in Drupal.

Monitoring tools such as New Relic or Grafana allow you to set up alerts based on key indicators, such as sustained increases in CPU usage or in the number of transactions. These patterns may indicate bot activity and enable a quick response.
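New Relic and Grafana each have their own alert-condition configuration, so the Python sketch below only illustrates the logic of a "sustained increase" rule: it fires once a metric has stayed above a multiple of its baseline for several consecutive samples, which filters out short spikes. The baseline, factor, and sample count are hypothetical values to tune per site.

```python
from dataclasses import dataclass, field


@dataclass
class SustainedIncreaseAlert:
    baseline: float             # normal value, e.g. typical transactions per minute
    factor: float = 2.0         # how far above baseline counts as unusual
    required_samples: int = 5   # consecutive unusual samples before firing
    _streak: int = field(default=0, init=False, repr=False)

    def observe(self, value: float) -> bool:
        """Feed one sample; return True while the alert condition holds."""
        if value > self.baseline * self.factor:
            self._streak += 1
        else:
            self._streak = 0  # a normal sample resets the streak
        return self._streak >= self.required_samples


alert = SustainedIncreaseAlert(baseline=1000)
samples = [950, 2400, 1100, 2300, 2500, 2600, 2450, 2700]  # transactions per minute
print([alert.observe(v) for v in samples])
# [False, False, False, False, False, False, False, True]
```

The isolated spike at 2,400 does not fire; only the run of five consecutive samples above 2,000 does.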

Conclusion

A well-defined and properly maintained cache policy is the first key mechanism to reduce the impact of bot traffic. Caching both dynamic and static content—along with an effective purge strategy—prevents many requests from reaching Drupal, helping to protect site performance. Integration with tools like Varnish or CDNs further strengthens this layer, improving speed and efficiency.

However, caching alone is not enough. Traffic generated by more sophisticated bots requires a multi-layered approach: advanced CDN-level management, custom rules based on User-Agent or URI, and the use of tools such as rate limiting or CAPTCHAs to identify and block malicious or unwanted bots.

It is also important to remember that not all bots are harmful. Legitimate bots—such as those used by search engines, monitoring systems, or testing tools—must be correctly identified and managed through exception rules at the CDN level, ensuring they have access without compromising performance or security.

When applied together, these measures offer effective and sustainable control over automated traffic.